An electronic skin facilitates natural interactions between robots and humans.

Robots have come a long way in the last few decades, becoming more agile and interactive as a result of advancements made in sensing, mechanics, and movement. But autonomous robotic systems are still limited when it comes to functioning in unstructured, changing environments, such as those where humans and robots need to work together.

One solution to this problem, according to a collaborative team of scientists from the Technical University of Munich (TUM), Germany, and Chalmers University of Technology in Gothenburg, Sweden, is to create responsive skins to help robots better navigate these situations.

Physical collaboration between humans and robots is more complicated than non-interactive or purely reactive scenarios. “Not only do [the robots] have to solve a collaborative task, but also comply with external forces applied by humans,” wrote the authors in their study. “For example, rehabilitation robots need to guide a patient’s limb and at the same time be compliant to prevent any injuries. Similarly, industrial manipulators need to react to the forces of their human co-workers and the surroundings to perform a collaborative assembly task.”

A lot of research is looking into enhancing these types of physical interactions, where a robot may be guided simply through human touch. “An important aspect of natural collaboration is the ability of the robot to feel contact forces — not just at its end-effector, but over a large area of its body,” said Professor Gordon Cheng, director of the Chair for Cognitive Systems, TUM. “For example, we might want to grasp the robot’s arm in order to guide it to a desired location, or we push against it to signal that we need more space.

“Unfortunately, the sense of touch is still very rare in today’s robotic platforms,” he added. “In our study, we wanted to investigate how information generated by a robotic skin could facilitate this collaboration between humans and machines.”

The robotic skin being referred to is a sensor network that spans the entire surface of the robot. “It is made out of small interconnected units called cells,” explained Cheng. “Each cell can measure different modalities such as force, proximity, vibrations, and temperature.”

The team, led by Cheng, showed how this network can extend the capabilities of robots during dynamic interactions with humans by providing information about multiple contacts across the robot's surface. The focus was on the integration of skin-based tasks that go beyond pure reaction, such as tactile guidance and force control: for example, preventing unforeseen contacts or, in the case of intentional contacts, controlling the force or pressure exchanged with the environment.
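To make the idea of a network of cells reporting multiple contacts more concrete, here is a minimal sketch in Python. It is not the team's controller: the `SkinCell` type, the `compliance_velocity` function, the gain, and the force threshold are all hypothetical names and values chosen for illustration. It assumes each cell reports a normal force along a known outward direction, and it simply adds up the pushes from every touched cell to produce a velocity that yields to them.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vector = Tuple[float, float, float]


@dataclass
class SkinCell:
    """One hypothetical skin cell (illustrative, not the authors' data format)."""
    normal: Vector   # outward unit normal of the cell on the robot's surface
    force: float     # measured normal force, arbitrary units
    proximity: float # 0.0 = nothing nearby, 1.0 = object about to touch


def active_contacts(cells: List[SkinCell], force_threshold: float = 0.1) -> List[SkinCell]:
    """Return only the cells whose force reading exceeds the (assumed) noise threshold."""
    return [cell for cell in cells if cell.force > force_threshold]


def compliance_velocity(cells: List[SkinCell], gain: float = 0.5,
                        force_threshold: float = 0.1) -> Vector:
    """Combine all active contacts into one velocity command that yields to the pushes.

    A push on a cell acts against its outward normal, so each contact
    contributes a velocity pointing opposite to that normal.
    """
    vx = vy = vz = 0.0
    for cell in active_contacts(cells, force_threshold):
        vx -= gain * cell.force * cell.normal[0]
        vy -= gain * cell.force * cell.normal[1]
        vz -= gain * cell.force * cell.normal[2]
    return (vx, vy, vz)


# Two firm touches and one reading below the threshold.
readings = [
    SkinCell(normal=(1.0, 0.0, 0.0), force=2.0, proximity=1.0),
    SkinCell(normal=(0.0, 1.0, 0.0), force=0.05, proximity=0.2),
    SkinCell(normal=(0.0, 0.0, 1.0), force=1.0, proximity=1.0),
]
command = compliance_velocity(readings)
```

In this toy version, the weak reading is ignored and the two firm touches are blended into a single motion away from both hands. A real system would also have to map each cell's position onto the robot's joints and respect task constraints, which this sketch leaves out.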

This approach offers flexibility in integrating the skin into any standard industrial robot to make it compliant or interactive. “Automating every single step of a production line is impossible,” said Simon Armleder, one of the researchers at TUM. “Even if the processes are highly predictable, there could still be an array of unexpected circumstances that require human intervention. This is why we need machines that can work with humans in a reliable manner. These robots must have the ability to sense human presence and adapt their behaviors to guarantee safety and proper task execution.”

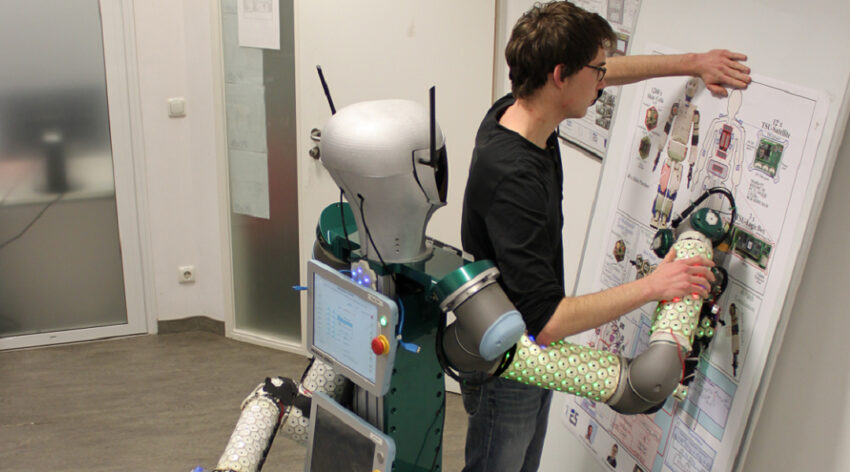

In a simplified scenario, the team had a robot helper assist a human partner in hanging a poster. The robot had to simultaneously interact with its partner, avoid colliding with anything in its environment, and apply pressure as needed to the poster to keep it on the wall.

“This is just one of many possible applications where multi-contacts interaction can enhance collaboration,” wrote the team. “Other scenarios [could include] carrying a heavy payload, handing over objects, [or] manipulating limp materials.”

“In the future, we expect more robots with better sensors located over the entire surfaces of their bodies, such as the whole-body robot skin we have developed,” said Cheng. “This will allow them to interact fluidly with their environment and human co-workers.”

Reference: Simon Armleder, et al., Interactive Force Control Based on Multi-Modal Robot Skin for Physical Human-Robot Collaboration, Advanced Intelligent Systems (2021). DOI: 10.1002/aisy.202100047