With the help of machine learning, a skin-like sensor internalizes different stimuli, allowing it to read and interpret hand movement.

Science has afforded us with many life-changing advancements. Vaccines to help protect against disease, 3D printers to help the blind visualize data, and the potential to harness limitless solar power from space.

Now, a team from Tsinghua University in Beijing have developed a self-powered sensor that can monitor and detect multiple environmental stimuli simultaneously and have applied it to effectively “translate” sign language into audio.

“We were inspired by the human skin, which can actively sense stimuli changes in the environment, including temperature, humidity, pressure, and light simultaneously,” explained Liangti Qu, a professor in the Department of Chemistry & Department of Mechanical Engineering at Tsinghua University and the study’s leader. “The fact that this perception process doesn’t need any external power supply makes it very different from currently available artificial sensors, just putting it in air.”

The sensor is made from graphene oxide, a material whose tunable electronic properties and regulated structure make it ideal for smart device applications. It is powered internally by a moist electric generator called MEG, which contains a membrane that spontaneously absorbs water from the air. When water adheres to the surface, this results in a higher concentration of hydrogen ions at the top of the membrane and a potential difference between its two electrodes.

“The sensor develops a positive and negative charge separation and potential after adsorbing water in air, like the spontaneously bioelectrical potential generation in human skin,” explained Qu. “When stimulated by ambient humidity, temperature, pressure, and light, the device produces electrical charges that induce a potential variation, producing distinct response signals to these stimuli.”

Machine learning helps translate sign language

The process is assisted by a machine learning module, which helps it to combine multiple external responses into a single signal that the device can then learn, store, and interpret.

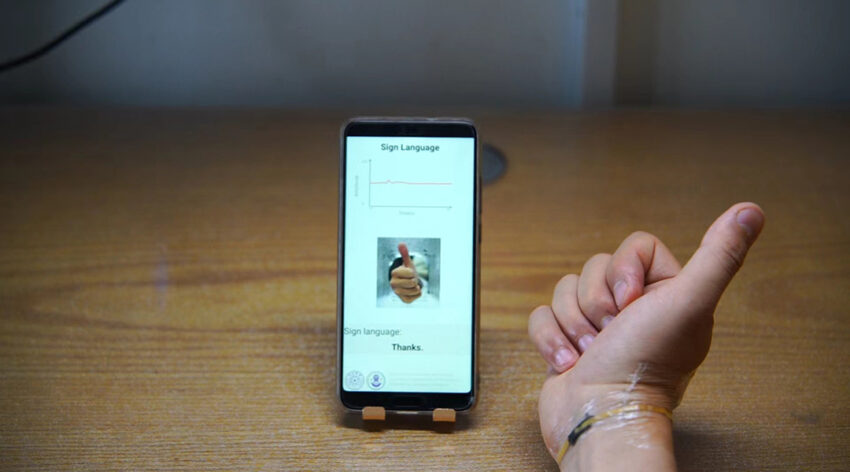

In the study, Qu and his colleagues Huhu Cheng and Ce Yang attached the sensor to a volunteer’s wrist and set about teaching the machine learning algorithm linked to the sensor to read and translate different finger and hand movements and associate them with different words and phrases.

“Different finger movements and hand gestures produce unique pressure stimulus sequences, which can be collected on a workstation via Bluetooth,” explained Cheng. “The machine learning model is able to extract features from moist-electric potential response signal sequences and predict the change in each stimulus at each time step based on the output of the LSTM network for that time step.

“By decoding and analyzing the received signal with a trained machine learning model, the classification of gestures based on pressure sequences is possible. The gesture command and the corresponding audio is then showed on a smartphone in an app we developed.”

Beyond this, the team foresee a number of applications, including integration into the Internet of Things, health monitoring, human-computer interactions, and a potable metaverse, to name but a few. However, the technology is still in its infancy and a few kinks need to be ironed out before widespread use.

“Environmental disturbances in complex application scenarios will cause noise in the response signal, which could be solved through the optimization of electrical signal processing or device performance,” said Qu. “We also need more training data. The distribution of the experimental data collected during tests and the actual application data will be different. We need to ensure the consistency of the training data and the test data.”

The future of multi-modal sensors appears bright, and with its development will likely come more life-changing advancements.

Reference: Liangti Qu, et al., A Machine-Learning-Enhanced Simultaneous and Multimodal Sensor Based on Moist-Electric Powered Graphene Oxide, Advanced Materials (2022). DOI: 10.1002/adma.202205249